Overview

As a developer, you’ll find yourself dealing with asynchronous functions at least once in your career. In this post, I’ll explain what “thread safe” means and show, through code, how Golang’s strengths help in handling concurrency.

What is Thread Safe?

In multithreaded programming, thread safety generally means that a function, variable, or object can be accessed simultaneously from multiple threads without causing problems in the program’s execution. More strictly, when one thread is executing a function and another thread calls and executes the same function at the same time, each thread must still get a correct result. (See wiki documentation.)

That can be hard to digest, but in a nutshell, code is thread safe when concurrently executed tasks each do what they are supposed to do. There are so many ways to achieve this, and so many situations where it can go wrong, that whenever I write concurrent code I find myself repeatedly running a simulation in my head to check whether it is thread safe.

Things that can happen if you don’t make it Thread Safe

Let’s look at a real-life example of what can happen with concurrency issues.

- You and your friend each try to buy the same 2 concert tickets at the same time and both purchases succeed, so you end up with 4 tickets instead of the 2 you intended to buy → DATA INTEGRITY ISSUE.

- Two people try to log in with the same account at the same time, each session waits for the other to finish, and neither ever gets in → DEADLOCK.

- A baggage screening system that can handle only one bag at a time fails to process bags properly when panicked customers push their luggage through the scanner at the same time → RACE CONDITION.

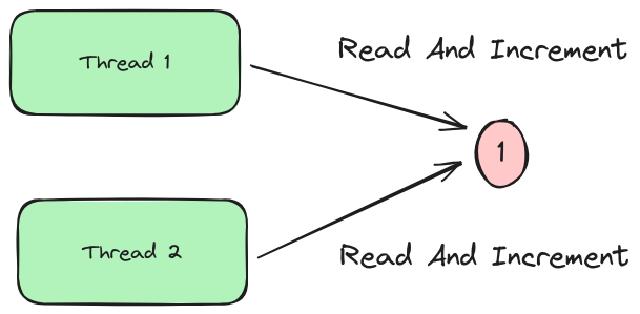

If you have logic that reads a shared resource, adds to it, and writes it back from several places at the same time, as shown in the image above, there is a high probability that your program will behave differently than intended: each user intended to add to the value, but both saw a value of 1 at the time of the read, so one of the additions is lost.
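The interleaving described above can be replayed deterministically in a single goroutine. The following is only an illustrative sketch: the function name `lostUpdate` and the two "tasks" A and B are assumptions made for this example, and the steps are written out by hand rather than run concurrently.

```go
package main

import "fmt"

// lostUpdate replays, step by step, the interleaving in which two tasks
// both read the shared value before either one writes its result back.
func lostUpdate() int {
	shared := 1

	readA := shared // task A reads 1
	readB := shared // task B also reads 1, before A has written

	shared = readA + 1 // task A writes 2
	shared = readB + 1 // task B writes 2 again, overwriting A's update

	return shared
}

func main() {
	// Two increments on a starting value of 1 should give 3.
	fmt.Println("expected 3, got", lostUpdate()) // prints "expected 3, got 2"
}
```

In a real concurrent program the scheduler decides the order of these four steps, which is why the bug only shows up some of the time.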

Writing Thread Safe Code

This part will vary depending on your development needs, but when programming for concurrency, you should check whether your code is exposed to any of the following issues:

- Data integrity issues

- Deadlocks

- Race conditions (data races)

To solve these problems, you can consider the following methods:

- Synchronize data access via locks

- Use atomic operations

- Utilize immutable data structures

- Utilize testing and analysis tools
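Of the methods above, immutable data is the one not shown elsewhere in this post, so here is a minimal sketch of the idea. The `settings` type and the helper names `withRetries` and `readConcurrently` are hypothetical and exist only for this example: a value that is never modified after construction can be read from many goroutines without a lock, and "changes" produce a new copy.

```go
package main

import (
	"fmt"
	"sync"
)

// settings is treated as immutable: after construction, no code modifies it.
type settings struct {
	name    string
	retries int
}

// withRetries returns a modified copy and leaves the original untouched.
func (s settings) withRetries(n int) settings {
	s.retries = n // s is already a copy because the receiver is a value
	return s
}

// readConcurrently lets several goroutines read the same value without a lock.
func readConcurrently(s settings, readers int) []int {
	seen := make([]int, readers)
	var wg sync.WaitGroup
	for i := 0; i < readers; i++ {
		wg.Add(1)
		go func(i int) {
			defer wg.Done()
			seen[i] = s.retries // each goroutine writes only its own slot
		}(i)
	}
	wg.Wait()
	return seen
}

func main() {
	base := settings{name: "default", retries: 3}
	updated := base.withRetries(5)

	fmt.Println(readConcurrently(base, 4))     // [3 3 3 3]
	fmt.Println(base.retries, updated.retries) // 3 5
}
```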

Thread Safe in Golang

Golang uses lightweight threads called goroutines to write asynchronous programs. Traditionally, threads communicate mostly through shared memory, with locks and queues used to coordinate access and pass messages. This complicates the flow of the code and makes it difficult to manage over time.

In Golang, we utilize Channels to solve this problem.

Do not communicate by sharing memory; instead, share memory by communicating

See: https://go.dev/blog/codelab-share
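One way to read that advice is that a piece of data should be owned by a single goroutine, and everyone else should send it messages. The sketch below is an assumption-laden illustration, not code from the linked article: the function name `countViaChannel` and the worker counts are made up for this example.

```go
package main

import (
	"fmt"
	"sync"
)

// countViaChannel starts `workers` goroutines that each send `perWorker`
// increments to a single owner goroutine, which is the only one that
// ever touches the counter.
func countViaChannel(workers, perWorker int) int {
	increments := make(chan int)
	result := make(chan int)

	// Owner goroutine: the counter lives here and nowhere else.
	go func() {
		counter := 0
		for n := range increments {
			counter += n
		}
		result <- counter
	}()

	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := 0; i < perWorker; i++ {
				increments <- 1
			}
		}()
	}

	wg.Wait()         // all workers have sent their increments
	close(increments) // lets the owner's range loop end
	return <-result
}

func main() {
	fmt.Println("Counter:", countViaChannel(5, 1000)) // Counter: 5000
}
```

Because only the owner goroutine reads or writes `counter`, no lock is needed around it.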

Channel Examples

Let’s work through channels with a concrete example. Here, 10 workers receive the numbers 1 through 10000 over a channel, each worker adds up the numbers it receives, and a separate goroutine combines the partial sums into a total.

    package main

    import (
    	"fmt"
    	"sync"
    )

    func main() {
    	const numWorkers = 10
    	const numNumbers = 10000

    	// Create the channels
    	numberChannel := make(chan int)
    	sumChannel := make(chan int)
    	totalSum := 0

    	// Use WaitGroup to wait for all goroutines to complete their work
    	var wg sync.WaitGroup

    	// Start the addition goroutines
    	for i := 0; i < numWorkers; i++ {
    		wg.Add(1)
    		go func(id int) {
    			defer wg.Done()
    			sum := 0
    			for num := range numberChannel {
    				sum += num
    			}

    			sumChannel <- sum
    			fmt.Printf("Goroutine %d: sum = %d\n", id, sum)
    		}(i)
    	}

    	// Start the goroutine that adds up the partial sums
    	wg.Add(1)
    	go func() {
    		defer wg.Done()

    		workerDoneCount := 0
    		for sum := range sumChannel {
    			totalSum += sum

    			// Once every worker has reported, close the channel to end this loop
    			workerDoneCount++
    			if workerDoneCount == numWorkers {
    				close(sumChannel)
    			}
    		}
    	}()

    	// Send the numbers
    	for i := 1; i <= numNumbers; i++ {
    		numberChannel <- i
    	}

    	// All numbers have been sent; close the channel so the workers' range loops end
    	close(numberChannel)

    	// Wait for all goroutines to finish their work
    	wg.Wait()

    	fmt.Printf("Total ==> %d\n", totalSum)
    }

The flow

- Each goroutine receives numbers from the numberChannel channel, accumulates them, and computes a sum.

- Once the sum is calculated, the goroutine sends the sum through the sumChannel channel.

- A separate goroutine receives the sums from the sumChannel channel and calculates the grand total.

- The main goroutine waits for all the goroutines to finish using sync.WaitGroup, and then outputs the total.

By using channels to pass data between tasks that run asynchronously, and a WaitGroup to synchronize their completion, we can update a shared total safely and get the result we intended.

But channels have their limitations. Depending on the channel pattern, it can be difficult to control the order in which work completes, and in some cases you may need locks or atomic operations to prevent data races.
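When only the order of the results matters, one possible workaround that stays within channels is to tag each message with the position of its input. This is a sketch under that assumption; the `result` type and the `squareInOrder` function are hypothetical names chosen for this example.

```go
package main

import (
	"fmt"
	"sync"
)

// result pairs a value with the position of the input that produced it.
type result struct {
	index int
	value int
}

// squareInOrder squares each input in its own goroutine and returns the
// results in input order, regardless of which goroutine finishes first.
func squareInOrder(inputs []int) []int {
	results := make(chan result)
	var wg sync.WaitGroup

	for i, n := range inputs {
		wg.Add(1)
		go func(i, n int) {
			defer wg.Done()
			results <- result{index: i, value: n * n}
		}(i, n)
	}

	// Close the channel once every worker has sent its result.
	go func() {
		wg.Wait()
		close(results)
	}()

	ordered := make([]int, len(inputs))
	for r := range results {
		ordered[r.index] = r.value // arrival order does not matter
	}
	return ordered
}

func main() {
	fmt.Println(squareInOrder([]int{1, 2, 3, 4, 5})) // [1 4 9 16 25]
}
```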

Using atomic operations

In Golang, most operations are not atomic by default. For example, the counter++ operation is not atomic because it reads the value, increments it, and writes it back as separate steps. To make such an update atomic, we need to use functions from the sync/atomic package provided by Golang.

    package main

    import (
    	"fmt"
    	"sync"
    	"sync/atomic"
    )

    func main() {
    	var counter int64
    	var wg sync.WaitGroup

    	for i := 0; i < 1000; i++ {
    		wg.Add(1)
    		go func() {
    			atomic.AddInt64(&counter, 1)
    			wg.Done()
    		}()
    	}

    	wg.Wait()
    	fmt.Println("Counter:", counter)
    }

We use the atomic.AddInt64 function to ensure this atomicity. This allows us to output a value of 1000 as intended, even if multiple goroutines are running at the same time.
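Addition is not the only atomic operation in sync/atomic. As a further sketch, a compare-and-swap loop can be used when the new value depends on the old one in a way that a plain add cannot express. The helper names `storeMax` and `maxOf` are assumptions for this example; `atomic.LoadInt64` and `atomic.CompareAndSwapInt64` are the standard library functions.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// storeMax raises *addr to v if v is larger, retrying when another
// goroutine changed the value between the load and the swap.
func storeMax(addr *int64, v int64) {
	for {
		cur := atomic.LoadInt64(addr)
		if v <= cur {
			return // the stored value is already at least v
		}
		if atomic.CompareAndSwapInt64(addr, cur, v) {
			return // the swap succeeded, so nobody interfered
		}
	}
}

// maxOf proposes the values 1..n from n goroutines and returns the largest.
func maxOf(n int) int64 {
	var highest int64
	var wg sync.WaitGroup
	for i := 1; i <= n; i++ {
		wg.Add(1)
		go func(v int64) {
			defer wg.Done()
			storeMax(&highest, v)
		}(int64(i))
	}
	wg.Wait()
	return atomic.LoadInt64(&highest)
}

func main() {
	fmt.Println("Max:", maxOf(1000)) // Max: 1000
}
```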

Using Locks

In Golang, you can also use locks to control access to shared resources. In this case, we don’t need the sync/atomic functions from the example above, because access to the counter is controlled by the lock.

    package main

    import (
    	"fmt"
    	"sync"
    )

    func main() {
    	var counter int64
    	var mu sync.Mutex // declare a mutex

    	var wg sync.WaitGroup

    	for i := 0; i < 1000; i++ {
    		wg.Add(1)
    		go func() {
    			mu.Lock()   // get a mutex lock
    			counter++   // increment shared variable
    			mu.Unlock() // unlock the mutex lock
    			wg.Done()
    		}()
    	}

    	wg.Wait()
    	fmt.Println("Counter:", counter)
    }

The result is the same as above, and you can see that counter is 1000 as intended.

Detailed debugging with the -race option

Golang has the -race option, which enables the race detector and reports unsynchronized concurrent access to the same memory where at least one access is a write. If a data race is detected in your code, it prints a report like the one below.

    go run -race main.go

    ==================
    WARNING: DATA RACE
    Read at 0x00c000014108 by goroutine 8:
      main.main.func1()
          /path/to/yourprog.go:25 +0x3e

    Previous write at 0x00c000014108 by goroutine 7:
      main.updateData()
          /path/to/yourprog.go:15 +0x2f

    Goroutine 8 (running) created at:
      main.main()
          /path/to/yourprog.go:23 +0x86

    Goroutine 7 (finished) created at:
      main.main()
          /path/to/yourprog.go:22 +0x68
    ==================

Summary

In this post, I explained what thread safety is, the situations where it can go wrong, and what you need to consider to prevent those problems.

I walked through a channel example in Golang, along with atomic operations, locks, and the -race option, and I hope this helps you understand what to consider when writing thread-safe code.